What is cloud data engineering?

Before learning how to optimize cloud data engineering, it is vital to understand the basics.

– IDC

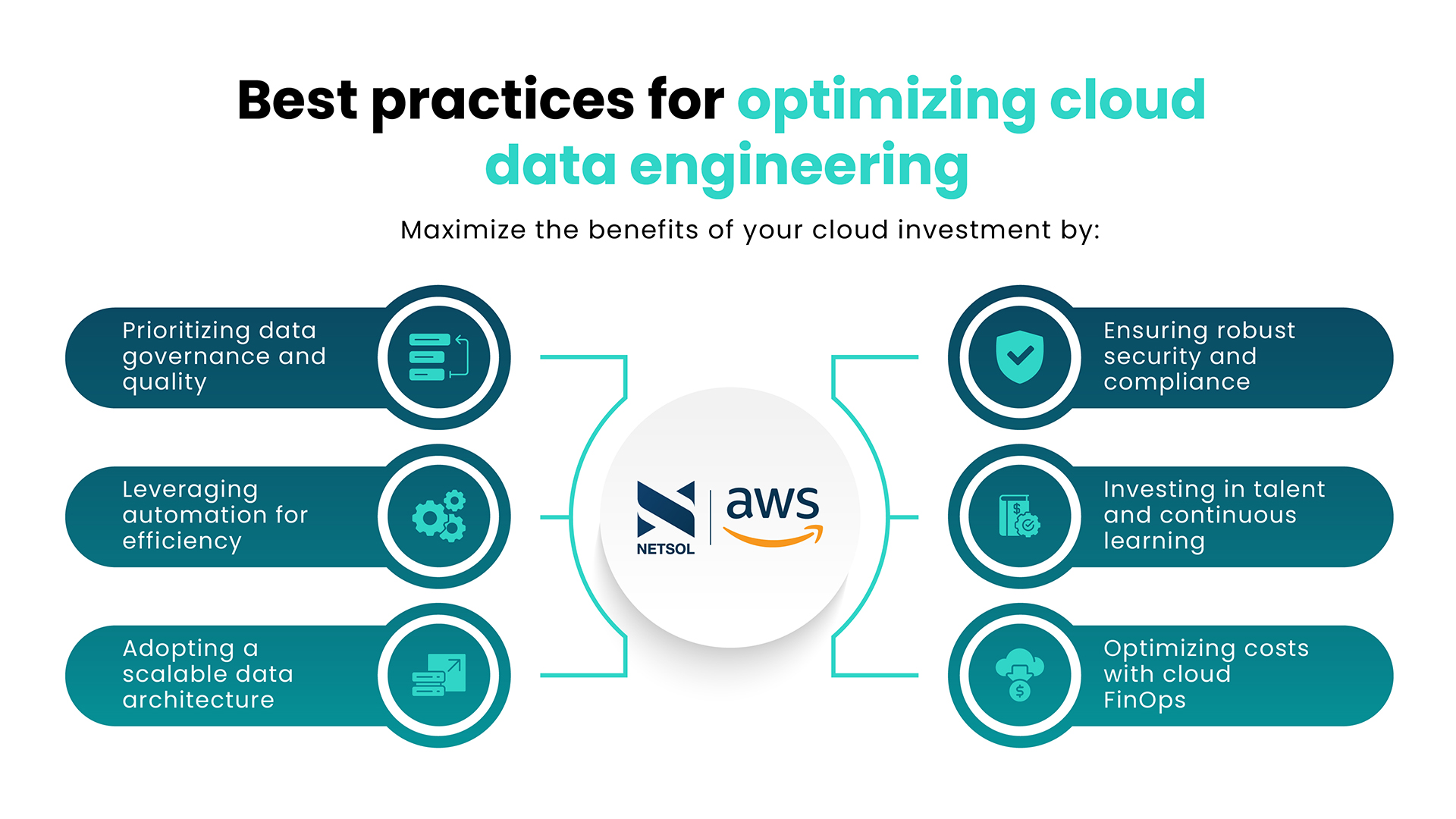

Best practices for optimizing cloud data engineering

Prioritizing data governance and quality

– Gartner

Leveraging automation for efficiency

Adopting a scalable data architecture

– TechRepublic

Ensuring robust security and compliance

Investing in talent and continuous learning

– Rackspace